Augmented Connection

Overview

Technology is more prevalent in healthcare settings now than it has ever been, thanks to the growing adoption of tools like Electronic Medical Records and machine learning algorithms for improving and validating diagnoses, so it's imperative that we begin to look toward the future of technology in the doctor's office. As doctors' offices become better equipped with computers, we are starting to lose out on doctor-patient interaction. With the help of augmented reality, we may be able to keep doctors and patients engaged with one another while still giving them the assistance they need. In an ever-diversifying world, we also face the challenge of reducing the barriers between people who don't share a common language, whether in a literal (e.g., English vs. Spanish) or figurative (e.g., medical jargon) sense.

The Problem

There is a persistent gap in understanding between doctors and patients who speak different native languages, and medical terminology only widens this gap.

Users & Audience

Our primary focus was on two groups:

1. Patients, particularly those who understand little medical terminology and don't speak the same language as their doctor

2. Medical professionals treating patients who don't speak their native language

Roles & Responsibilities

I served as the primary interaction designer for this project, constructing prototypes of different degrees of fidelity and testing them to reveal their benefits and weaknesses. I also worked closely with the developers to help translate design decisions into a minimum viable product.

Scope & Constraints

This project was completed for a class called Ubiquitous Computing, and we were limited to working with augmented and virtual reality devices. We were also constrained by time, with ten weeks to complete the project.

The Process

Needfinding

Interviews were conducted with two Navy Nurse Corps nurses in their mid-fifties, as well as a Skilled Nursing Facility admissions director, age twenty-one. Initial research suggested concentrating on one or more of the following categories:

1. Language barriers and deficits

2. Agitated, confused, and/or ignorant patients

3. Unresponsive patients

4. Confirmation between patients and healthcare providers that the proper service has been provided

With these insights, we began to ideate on possible solutions. Designs centered on an augmented reality (AR) display that could provide information such as translations and definitions to patients and doctors, bringing these resources directly into the wearer’s field of view so that eye contact could be maintained.

Low Fidelity Prototyping

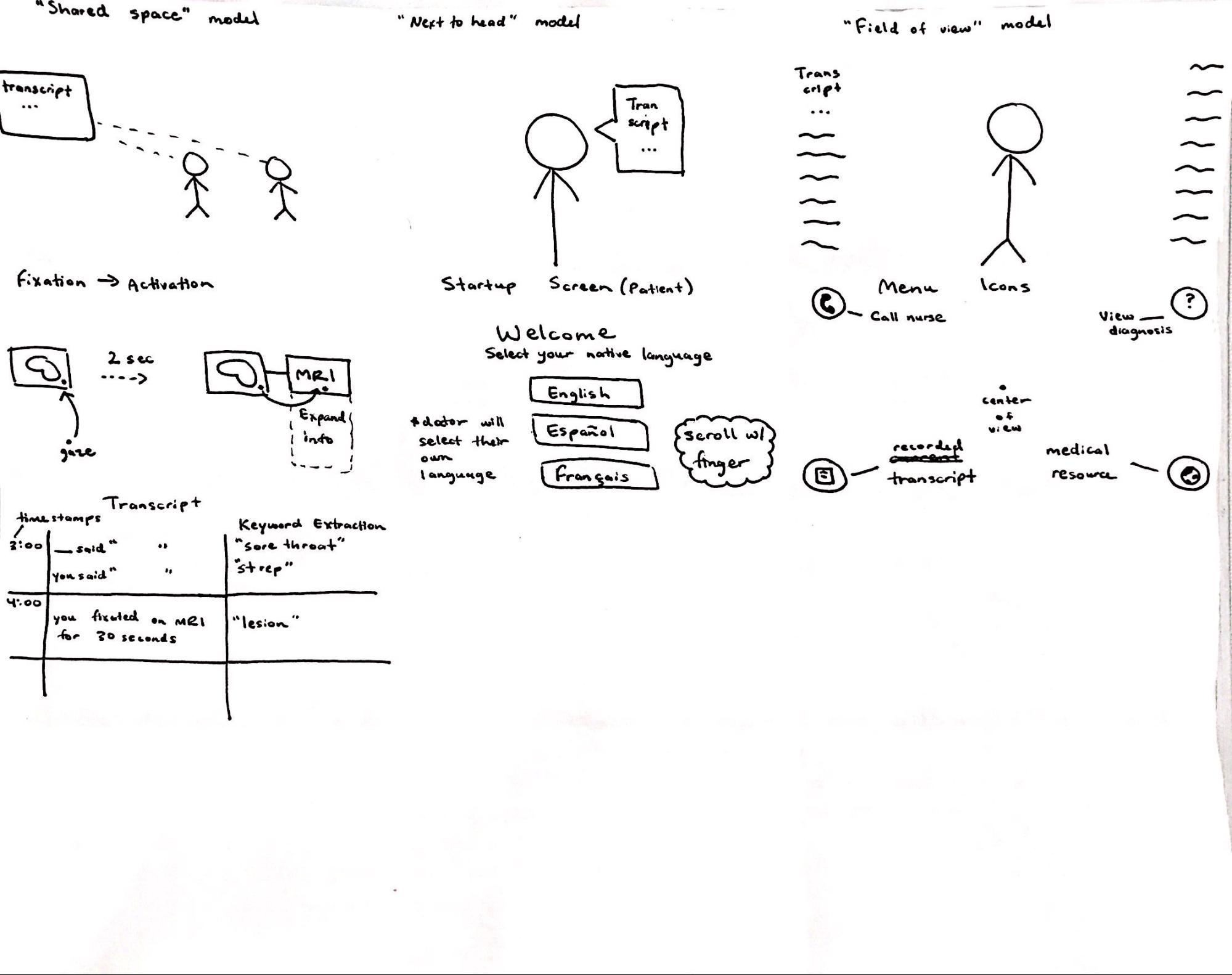

We began prototyping our AR environment with paper first. In combination with Wizard-of-Oz techniques, the team was able to role-play and evaluate the user's experience from both the doctor's and the patient's perspectives.

For example, when the patient selected "Keyword Simplification" by pointing at the button, the doctor would start talking about the patient's symptoms, at which time paper speech bubbles would appear beside them with the keywords they said, redefining them with simpler language, as shown below. If the patient pointed at "Full Translation Mode" instead, they would see everything that the doctor had said, translated into a specified language.

Storyboarding

In order to peer into the future of this product and gain insight into possible features and their uses, we sketched out storyboards and features on paper. These sketches allowed us to visualize our ideas and inspired future prototypes.

Medium Fidelity Prototyping

We began to prototype the application in augmented reality on the Microsoft HoloLens, using a proprietary tool developed in UCSD’s Weibel Lab called PrototipAR. We were able to draw interface elements on an iPad, send them to a HoloLens, and manipulate them in space to simulate how a fully developed application might look and act. We established a base user interface of live translations and keyword descriptions. We found that having text in the field of view was rather non-intrusive, so we continued with the idea of peripheral translation aids. We later moved prototype design and evaluation into a mock operating room inside The Design Lab at UCSD. This environment allowed for much more realistic prototyping sessions.

User Testing

We conducted numerous levels of user testing to produce our final prototype:

1. Within our team by having members play the roles of doctor or patient, following pre-written scripts

2. With a class TA, using Wizard-of-Oz methods

3. With a medical intake professional and two nurses, to receive professional feedback on the viability and usability of our system

We found that having full translations on screen was overwhelming. Patients more often speak broken English and have trouble only with certain words, which they would rather say in their native tongue. User testing brought our assumptions to the surface, helping us make more informed decisions when building our prototypes and final design. It also offered a fresh perspective on the viability of our project, leading us to narrow our features down to what was truly necessary.

High Fidelity UI Mock-ups

As testing advanced, a higher fidelity prototype was produced using Figma. We built out a model that included all necessary features, specifically:

1. A shared transcript viewable in the native languages of the users

2. An emphasis on medical keywords derived from the conversation

3. Overlaid descriptions of medical devices and scans, upon inspection

4. The ability to call for a nurse

5. General descriptions of location, time, etc.

This model provided points-of-view for both the doctor and the patient. The style is most similar to a heads-up display, in which text bubbles appear in the upper left, and the transcript and nurse button are available along the bottom of the field of view. Each emulated HoloLens can support its own language, native to the user. This interactive prototype was displayed alongside our final presentation.

Final Minimum Viable Product

The final design drew from the results of our testing, simplifying the user interface to a shared, billboard-style experience. Both users see the same list of keywords extracted from the conversation, including symptoms, conditions, prescriptions, dosages, and diagnoses. This interface was found to be less intrusive, allowing a more natural conversation between the doctor and patient. It acts as a reference during the visit and a reviewable record afterward. This information may help prevent misdiagnoses and failures to follow treatment instructions.
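As a rough illustration of the shared keyword list, the sketch below tags terms in a transcribed utterance with the categories named above. The `GLOSSARY` entries, the `DOSAGE_PATTERN` expression, and the `extract_keywords` function are all assumptions made for illustration; this write-up does not describe how the MVP actually extracted its keywords.

```python
import re
from collections import defaultdict

# Hypothetical glossary mapping lowercase terms to a category.
# A real system would need a far larger, clinically reviewed vocabulary.
GLOSSARY = {
    "headache": "symptom",
    "nausea": "symptom",
    "hypertension": "condition",
    "migraine": "diagnosis",
    "ibuprofen": "prescription",
}

# Assumed dosage format: a number followed by a common unit, e.g. "200 mg".
DOSAGE_PATTERN = re.compile(r"\b\d+(?:\.\d+)?\s?(?:mg|ml|mcg|g)\b", re.IGNORECASE)


def extract_keywords(utterance: str) -> dict[str, list[str]]:
    """Group the recognized terms in one utterance by category."""
    found = defaultdict(list)

    # Match single words against the glossary, skipping repeats.
    for word in re.findall(r"[a-z]+", utterance.lower()):
        category = GLOSSARY.get(word)
        if category and word not in found[category]:
            found[category].append(word)

    # Dosages are matched by pattern rather than by glossary lookup.
    for dose in DOSAGE_PATTERN.findall(utterance):
        found["dosage"].append(dose)

    return dict(found)


keywords = extract_keywords("Take 200 mg of ibuprofen for the headache and nausea.")
```

Keeping the result grouped by category means the billboard can show a short, scannable list during the visit and store the same structure as the record to review afterward.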

Reflection

Future Work

There's much work that could be done to further improve this system. This is just an initial step in the right direction. We would like to do the following:

1. Use a more lightweight viewing device with less computational power, since the headset is used only for display while a secondary computer acts as a hub to do the heavy lifting

2. Allow the patient to see more detailed explanations of the parsed keywords by gazing at them for a prolonged time

3. Make the shared transcript movable to accommodate busy office spaces

4. Overlay information on the medical devices and images

Conclusion

We set out to develop a system that fosters better understanding between doctors and patients, and I believe we have taken a step in the right direction. Our system takes in vocal input in different languages and parses it to obtain the important medical terminology being used, then displays those keywords in the native language of each person viewing the shared transcript. This transcript serves as a reference for remembering what occurred during the visit, and allows both parties to better understand each other. Iteration and time are all that's necessary to get this product to market.
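The last step of that flow, showing one shared keyword list to each viewer in their own language, could be sketched roughly as follows. The `TRANSLATIONS` table, the `Viewer` class, and the `render_for` function are hypothetical names used only for illustration; a real system would depend on speech recognition and a translation service, neither of which is specified here.

```python
from dataclasses import dataclass

# Hypothetical translation table: English keyword -> language code -> text.
TRANSLATIONS = {
    "headache": {"en": "headache", "es": "dolor de cabeza"},
    "fever": {"en": "fever", "es": "fiebre"},
}


@dataclass
class Viewer:
    name: str
    language: str  # language code, e.g. "en" or "es"


def render_for(viewer: Viewer, keywords: list[str]) -> list[str]:
    """Return the shared keyword list in the viewer's language.

    Terms without a known translation fall back to the original keyword.
    """
    return [TRANSLATIONS.get(k, {}).get(viewer.language, k) for k in keywords]


shared_keywords = ["headache", "fever", "ibuprofen"]
doctor_view = render_for(Viewer("doctor", "en"), shared_keywords)
patient_view = render_for(Viewer("patient", "es"), shared_keywords)
```

Falling back to the original term when no translation exists keeps both views the same length and in the same order, so the doctor and patient can still point to the same entry.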